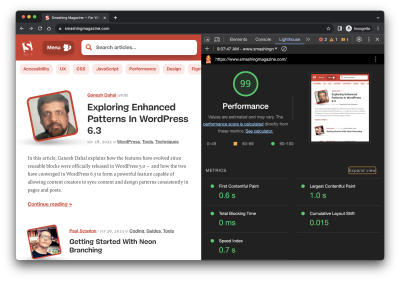

Running a performance test on your site isn’t too terribly complicated. It may even be something you do regularly with Lighthouse in Chrome DevTools, where testing is freely available and produces a very nice-looking report.

Lighthouse is only one performance auditing tool out of many. The convenience of having it tucked into Chrome DevTools is what makes it an easy go-to for many developers.

But do you know how Lighthouse calculates performance metrics like First Contentful Paint (FCP), Total Blocking Time (TBT), and Cumulative Layout Shift (CLS)? There’s a handy calculator linked in the report summary that lets you adjust performance values to see how they impact the overall score. Still, there’s nothing in there to tell us about the data Lighthouse is using to evaluate metrics. The linked explainer provides more details, from how scores are weighted to why scores may fluctuate between test runs.
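For a rough idea of how those pieces fit together, here is a minimal Python sketch. It assumes the Lighthouse v10 weights (FCP 10%, Speed Index 10%, LCP 25%, TBT 30%, CLS 25%) and the log-normal scoring curve described in Lighthouse’s scoring documentation: each raw metric value is mapped to a score between 0 and 1 using two control points (a `median` that scores 0.5 and a `p10` that scores 0.9), and the overall score is the weighted average. Weights and control points change between Lighthouse versions and between mobile and desktop, so treat the numbers as assumptions, not a reference implementation.

```python
import math

# Assumption: Lighthouse v10 weights. Other versions (and desktop vs mobile) differ.
WEIGHTS = {"FCP": 0.10, "SI": 0.10, "LCP": 0.25, "TBT": 0.30, "CLS": 0.25}

# Inverse of erfc at 0.2; it places the p10 control point at a score of 0.9.
INVERSE_ERFC_ONE_FIFTH = 0.9061938024368232


def log_normal_score(value: float, median: float, p10: float) -> float:
    """Map a raw metric value to a 0-1 score on a log-normal curve.

    A value equal to `median` scores 0.5; a value equal to `p10` scores 0.9.
    Lower raw values (faster pages) score higher.
    """
    if value <= 0:
        return 1.0
    x_log_ratio = math.log(value / median)
    p10_log_ratio = -math.log(p10 / median)  # positive, because p10 < median
    standardized = x_log_ratio * INVERSE_ERFC_ONE_FIFTH / p10_log_ratio
    return min(1.0, max(0.0, math.erfc(standardized) / 2))


def performance_score(metric_scores: dict[str, float]) -> int:
    """Weighted average of the per-metric scores, on a 0-100 scale."""
    total = sum(weight * metric_scores[name] for name, weight in WEIGHTS.items())
    return round(total * 100)
```

For example, with hypothetical LCP control points of `p10=2500` and `median=4000` milliseconds, an LCP of 4,000 ms scores exactly 0.5 and an LCP of 2,500 ms scores about 0.9. Because TBT carries the largest weight in this version, the same improvement in milliseconds can move the overall score very differently depending on which metric it lands in.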

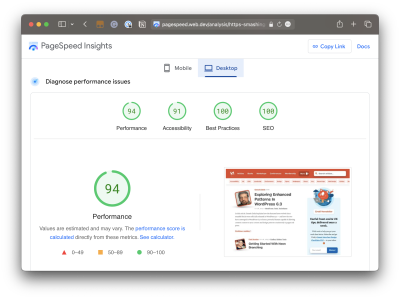

Why do we need Lighthouse at all when Google also offers similar reports in PageSpeed Insights (PSI)? The truth is that the two tools were quite distinct until PSI was updated in 2018 to use Lighthouse reporting.

Did you notice that the Performance score in Lighthouse is different from that PSI screenshot? How can one report result in a near-perfect score while the other appears to find more reasons to lower the score? Shouldn’t they be the same if both reports rely on the same underlying tooling to generate scores?

That’s what this article is about. Different tools make different assumptions using different data, whether we are talking about Lighthouse, PageSpeed Insights, or commercial services like DebugBear. That’s what accounts for different results. But there are more specific reasons for the divergence.

Let’s dig into those by answering a set of common questions that pop up during performance audits.

What Does It Mean When PageSpeed Insights Says It Uses “Real-User Experience Data”?

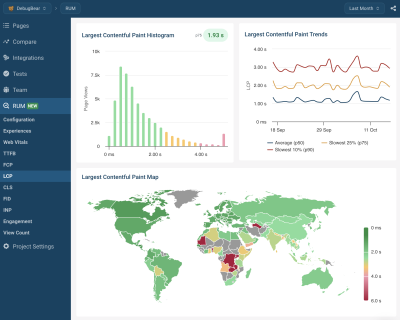

This is a great question because it provides a lot of context for why it’s possible to get varying results from different performance auditing tools. In fact, when we say “real user data,” we’re really referring to two different types of data. And when discussing the two types of data, we’re actually talking about what is called real-user monitoring, or RUM for short.

Type 1: Chrome User Experience Report (CrUX)

What PSI means by “real-user experience data” is that it evaluates the performance data used to measure the core web vitals from your tests against the core web vitals data of actual real-life users. That real-life data is pulled from the Chrome User Experience (CrUX) report, a set of anonymized data collected from Chrome users, or at least from those who have consented to share data.

CrUX data is important because it is how core web vitals are measured, which, in turn, are a ranking factor for Google’s search results. Google focuses on the 75th percentile of users in the CrUX data when reporting core web vitals metrics. This way, the data represents a vast majority of users while minimizing the influence of outlier experiences.

But it comes with caveats. For example, the data is slow to reflect changes because each value covers a rolling 28-day period, which means it’s not the same as real-time monitoring. At the same time, if you plan on using the data yourself, you may find yourself limited to reporting within that floating 28-day range unless you make use of the CrUX History API or BigQuery to produce historical results you can measure against. CrUX is what fuels PSI and Google Search Console, but it is also available in other tools you may already use.
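If you want to pull CrUX data yourself, the CrUX API and the CrUX History API both accept a small JSON body sent with a POST request. The sketch below uses only Python’s standard library and builds the request without sending it; `YOUR_API_KEY` is a placeholder for a Google Cloud API key, and the metric list is just an example.

```python
import json
import urllib.request

# Endpoints of the CrUX API (latest 28-day window) and CrUX History API.
CRUX_URL = "https://chromeuxreport.googleapis.com/v1/records:queryRecord"
CRUX_HISTORY_URL = "https://chromeuxreport.googleapis.com/v1/records:queryHistoryRecord"


def build_crux_request(origin: str, api_key: str, history: bool = False,
                       form_factor: str = "PHONE") -> urllib.request.Request:
    """Build (but do not send) a POST request for an origin's CrUX data."""
    base = CRUX_HISTORY_URL if history else CRUX_URL
    body = {
        "origin": origin,
        "formFactor": form_factor,  # "PHONE", "DESKTOP", or "TABLET"
        "metrics": ["largest_contentful_paint", "cumulative_layout_shift"],
    }
    return urllib.request.Request(
        f"{base}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def fetch_crux(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (needs a valid API key)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `fetch_crux(build_crux_request("https://example.com", "YOUR_API_KEY", history=True))` asks the History API for values from a series of past collection periods instead of only the latest one, which is what makes before-and-after comparisons possible.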

Barry Pollard, a web performance developer advocate for Chrome, wrote an essential primer on the CrUX Report for 4nds Journal.

Type 2: Full Real-User Monitoring (RUM)

If CrUX offers one flavor of real-user data, then we can consider “full real-user data” to be another flavor that provides even more detail about individual experiences, such as specific network requests made by the page. This data is distinct from CrUX because it’s collected directly by the website owner, who installs an analytics snippet on their website.

Unlike CrUX data, full RUM pulls data from users on other browsers in addition to Chrome and does so on a continual basis. That means there’s no waiting 28 days for a fresh set of data to see the impact of any changes made to a site.
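The difference between a rolling 28-day window and continuous RUM is easiest to see with numbers. The sketch below is a simplified model, not how Google actually aggregates CrUX (which works from histograms): it pools every sample from the last 28 days into one 75th percentile and compares that with a per-day 75th percentile that a full RUM tool could report. The sample values are hypothetical.

```python
from statistics import quantiles


def p75(values: list[float]) -> float:
    """75th percentile, interpolating between samples (inclusive method)."""
    if len(values) == 1:
        return values[0]
    return quantiles(values, n=4, method="inclusive")[2]


def daily_p75(daily_samples: list[list[float]]) -> list[float]:
    """Full-RUM style: one p75 per day, so a fix shows up the next day."""
    return [p75(day) for day in daily_samples]


def rolling_p75(daily_samples: list[list[float]], window: int = 28) -> list[float]:
    """CrUX-like: each day reports the p75 of all samples from the last `window` days."""
    result = []
    for i in range(len(daily_samples)):
        recent_days = daily_samples[max(0, i - window + 1): i + 1]
        pooled = [value for day in recent_days for value in day]
        result.append(p75(pooled))
    return result
```

With 28 days of hypothetical 3,000 ms LCP samples followed by a fix that drops them to 2,000 ms, `daily_p75` reports 2,000 ms on the first day of the fix, while `rolling_p75` still reports 3,000 ms and only reaches 2,000 ms on the 22nd day with the fix in place.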

You can see how you might wind up with different results in performance tests simply because of the type of real-user monitoring (RUM) that is in use. Both types are useful, but they answer different questions: CrUX offers a Chrome-only view that lags by up to 28 days, while full RUM covers other browsers too and reflects changes almost immediately.

Does Lighthouse Use RUM Data, Too?

It doesn’t! It uses synthetic data, or what we commonly call lab data. And, just like RUM, we can explain the concept of lab data by breaking it up into two different types.

Type 1: Observed Data

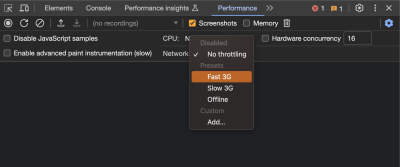

Observed data is performance as the browser sees it. So, instead of monitoring real information collected from real users, observed data is more like defining the test conditions ourselves. For example, we could add throttling to the test environment to enforce an artificial condition where the test opens the page on a slower connection. You might think of it like racing a car in virtual reality, where the conditions are decided in advance, rather than racing on a live track where conditions may vary.

Type 2: Simulated Data

While we called that last type of data “observed data,” that is not an official industry term or anything. It’s more of a necessary label to help distinguish it from simulated data, which describes how Lighthouse (and many other tools that include Lighthouse in their feature set, such as PSI) applies throttling to a test environment and the results it produces.

The reason for the distinction is that there are different ways to throttle a network for testing. Simulated throttling starts by collecting data on a fast internet connection, then estimates how quickly the page would have loaded on a different connection. The result is a much faster test than it would be to apply throttling before collecting data. Lighthouse can often grab the results and calculate its estimates faster than it would take to gather the data and parse it on an artificially slower connection.
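To make the idea of simulated throttling concrete, here is a deliberately simplified Python sketch. It assumes each resource’s size and the page’s CPU time were measured on a fast connection, then estimates the load time under slower conditions. The defaults are the “Slow 4G” values commonly documented for Lighthouse’s simulated mobile throttling (150 ms round-trip time, 1,638.4 Kbps throughput, 4x CPU slowdown), and the resources are treated as loading strictly one after another. Lighthouse’s real simulator (Lantern) models a dependency graph with parallel connections, so its estimates will differ.

```python
from dataclasses import dataclass

# Assumption: Lighthouse's default "Slow 4G" settings for simulated mobile throttling.
DEFAULT_RTT_MS = 150
DEFAULT_THROUGHPUT_KBPS = 1638.4
DEFAULT_CPU_SLOWDOWN = 4


@dataclass
class Resource:
    size_kb: float    # transferred size in kilobytes, measured on a fast connection
    round_trips: int  # round trips before data flows (e.g. DNS, TCP, TLS, request)


def estimate_load_ms(resources: list[Resource], cpu_ms_fast: float,
                     rtt_ms: float = DEFAULT_RTT_MS,
                     throughput_kbps: float = DEFAULT_THROUGHPUT_KBPS,
                     cpu_slowdown: float = DEFAULT_CPU_SLOWDOWN) -> float:
    """Estimate load time on a slower connection from fast-connection measurements.

    Simplification: resources load sequentially, with no parallel connections.
    """
    network_ms = sum(
        r.round_trips * rtt_ms + (r.size_kb * 8 / throughput_kbps) * 1000
        for r in resources
    )
    return network_ms + cpu_ms_fast * cpu_slowdown
```

For example, a single 204.8 KB resource that needs two round trips, plus 100 ms of main-thread work measured on a fast machine, comes out at 300 + 1,000 + 400 = 1,700 ms. No slow connection was ever used, which is why simulated tests finish so quickly.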

Simulated And Observed Data In Lighthouse

Simulated data is the data that Lighthouse uses by default for performance reporting. It’s also what PageSpeed Insights uses, since it’s powered by Lighthouse under the hood, although PageSpeed Insights also relies on real-user experience data from the CrUX report.

However, it’s also possible to collect observed data with Lighthouse. This data is more reliable because it doesn’t depend on an incomplete simulation of Chrome internals and the network stack. The accuracy of observed data depends on how the test environment is set up. If throttling is applied at the operating system level, then the metrics match what a real user with those network conditions would experience. DevTools throttling is easier to set up, but it doesn’t accurately reflect how server connections work on the network.
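Collecting observed data from the Lighthouse CLI mostly comes down to the `--throttling-method` flag. The sketch below only builds the command line: `provided` tells Lighthouse not to throttle at all, which is what you want when the test machine’s network is already throttled at the operating system level, and `devtools` uses DevTools throttling instead. It assumes the `lighthouse` npm package is installed and on your `PATH`; `report.json` is an arbitrary output path.

```python
# Assumption: the Lighthouse CLI (npm package "lighthouse") is installed and on PATH.
OBSERVED_METHODS = ("provided", "devtools")


def lighthouse_command(url: str, throttling_method: str = "provided",
                       output_path: str = "report.json") -> list[str]:
    """Build a Lighthouse CLI command that records observed rather than simulated data.

    "provided": Lighthouse adds no throttling of its own (pair it with OS-level throttling).
    "devtools": Lighthouse throttles through Chrome DevTools instead.
    """
    if throttling_method not in OBSERVED_METHODS:
        raise ValueError(
            f"{throttling_method!r} is not an observed-data method; "
            "Lighthouse's default, 'simulate', produces simulated data"
        )
    return [
        "lighthouse",
        url,
        f"--throttling-method={throttling_method}",
        "--only-categories=performance",
        "--output=json",
        f"--output-path={output_path}",
    ]
```

Running it is then a matter of passing the list to `subprocess.run(..., check=True)`. Keeping the command in one function makes it easy to repeat the exact same configuration on every run.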

Limitations Of Lab Data

Lab data is fundamentally limited by the fact that it only looks at a single experience in a predefined environment. This environment often doesn’t even match the average real user on the website, who may have a faster network connection or a slower CPU. Continuous real-user monitoring can actually tell you how users are experiencing your website and whether it’s fast enough.

So why use lab data at all?

Google CrUX data only reports metric values, with no debug data telling you how to improve your metrics. In contrast, lab reports contain a lot of analysis and recommendations on how to improve your page speed.

Why Is My Lighthouse LCP Score Worse Than The Real User Data?

It’s a little easier to explain different scores now that we’re familiar with the different types of data used by performance auditing tools. We now know that Google reports on the 75th percentile of real users when reporting core web vitals, which include LCP.

“By using the 75th percentile, we know that most visits to the site (3 of 4) experienced the target level of performance or better. Additionally, the 75th percentile value is less likely to be affected by outliers. Returning to our example, for a site with 100 visits, 25 of those visits would need to report large outlier samples for the value at the 75th percentile to be affected by outliers. While 25 of 100 samples being outliers is possible, it is much less likely than for the 95th percentile case.”
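The arithmetic in that quote is easy to reproduce. This sketch builds 100 hypothetical visits with a 2,000 ms LCP, replaces some of them with 20,000 ms outliers, and reads the 75th and 95th percentiles with Python’s `statistics.quantiles`. The inclusive interpolation method is an assumption; CrUX computes its percentiles differently.

```python
from statistics import quantiles


def visits(n_outliers: int, total: int = 100, typical_ms: int = 2000,
           outlier_ms: int = 20000) -> list[int]:
    """Hypothetical LCP samples: mostly typical visits plus some slow outliers."""
    return [typical_ms] * (total - n_outliers) + [outlier_ms] * n_outliers


def p75_and_p95(samples: list[int]) -> tuple[float, float]:
    """75th and 95th percentiles, interpolating between samples (inclusive method)."""
    cut_points = quantiles(samples, n=100, method="inclusive")
    return cut_points[74], cut_points[94]


for n in (4, 5, 24, 25):
    p75, p95 = p75_and_p95(visits(n))
    print(f"{n:>2} outliers: p75={p75:.0f} ms, p95={p95:.0f} ms")
```

Five outliers are already enough to move the 95th percentile (to 2,900 ms), while the 75th percentile stays at 2,000 ms until the 25th outlier, at which point it jumps to 6,500 ms.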

On the flip side, simulated data from Lighthouse neither reports on real users nor accounts for outlier experiences in the same way that CrUX does. So, if we were to set heavy throttling on the CPU or network of a test environment in Lighthouse, we’re actually embracing outlier experiences that CrUX might otherwise toss out. Because Lighthouse applies heavy throttling by default, the result is that we get a worse LCP score in Lighthouse than in the real-user data shown in PSI, simply because Lighthouse’s data effectively looks at a slow outlier experience.

Why Is My Lighthouse CLS Score Better Than The Real User Data?

Just so we’re on the same page, Cumulative Layout Shift (CLS) measures the “visual stability” of a page layout. If you’ve ever visited a page, scrolled down it a bit before the page fully loaded, and then noticed that your place on the page shifted when the page load completed, then you know exactly what CLS is and how it feels.

The nuance here has to do with page interactions. We know that real users are capable of interacting with a page even before it has fully loaded. This is a big deal when measuring CLS because layout shifts often occur lower on the page after a user has scrolled down. CrUX data is ideal here because it’s based on real users who do exactly that and bear the worst effects of CLS.

Lighthouse’s simulated data, meanwhile, does no such thing. It waits patiently for the full page load and never interacts with parts of the page. It doesn’t scroll, click, tap, hover, or interact in any way.

This is why the CLS value in the real-user data of a PSI report is likely to be higher (worse) than the one you get in Lighthouse. It’s not that PSI likes you less, but that the real users in its report are a better reflection of how people interact with a page, and they are more likely to experience layout shifts than a simulated lab run.
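CLS is not simply the sum of every shift. Each layout shift scores its impact fraction multiplied by its distance fraction, shifts within 500 ms of a discrete input such as a click, tap, or key press are excluded, and the reported CLS is the largest “session window” of shifts that are less than 1 second apart and span at most 5 seconds. The Python sketch below follows those rules; the shift timings and values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LayoutShift:
    time_ms: float                  # when the shift happened
    value: float                    # impact fraction x distance fraction
    had_recent_input: bool = False  # True if within 500 ms of a click, tap, or key press


def cumulative_layout_shift(shifts: list[LayoutShift]) -> float:
    """Largest session window of shifts < 1 s apart, each window at most 5 s long."""
    largest = 0.0
    window_value = 0.0
    window_start = None
    previous_time = None
    for shift in sorted(shifts, key=lambda s: s.time_ms):
        if shift.had_recent_input:
            continue  # shifts right after discrete user input don't count
        starts_new_window = (
            window_start is None
            or shift.time_ms - previous_time >= 1000
            or shift.time_ms - window_start >= 5000
        )
        if starts_new_window:
            window_start = shift.time_ms
            window_value = 0.0
        window_value += shift.value
        previous_time = shift.time_ms
        largest = max(largest, window_value)
    return largest
```

A lab run that only sees two small shifts during load (0.02 at 100 ms and 0.03 at 300 ms) reports a CLS of about 0.05. A real user who scrolls and triggers two more shifts further down the page (0.1 at 4,000 ms and 0.08 at 4,500 ms) ends up with a CLS of about 0.18, because that later session window is now the largest. Scrolling is not a discrete input, so those shifts still count.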

Why Is Interaction to Next Paint Missing In My Lighthouse Report?

This is another case where it’s helpful to know the different types of data used in different tools and how that data interacts (or doesn’t) with the page. That’s because the Interaction to Next Paint (INP) metric is all about interactions. It’s right there in the name!

The fact that Lighthouse’s simulated lab data doesn’t interact with the page is a dealbreaker for an INP report. INP is a measure of the latency of all interactions on a given page, where the highest latency (or close to it) determines the final value. For example, if a user clicks on an accordion panel and it takes longer for the content in the panel to render than for any other interaction on the page, that is what gets used to evaluate INP.
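As a rough model of INP, the sketch below takes the latencies of every interaction during one page view and reports the slowest, skipping one of the highest latencies for every 50 interactions so that a single hiccup on a busy page doesn’t dominate. This follows the approach described in the web.dev INP documentation but is simplified (the `web-vitals` library, for instance, only keeps a short list of the slowest interactions). Note what happens when there are no interactions at all, as in a Lighthouse run that only loads the page: there is nothing to report.

```python
from typing import Optional


def estimate_inp(latencies_ms: list[float]) -> Optional[float]:
    """Approximate INP from the latencies of all interactions on one page view.

    Reports the slowest interaction, but skips one of the slowest for every
    50 interactions so a single outlier on a busy page doesn't dominate.
    """
    if not latencies_ms:
        return None  # No interactions (e.g. a load-only lab run): no INP to report.
    slowest_first = sorted(latencies_ms, reverse=True)
    skipped = min(len(slowest_first) // 50, len(slowest_first) - 1)
    return slowest_first[skipped]
```

With 60 hypothetical interactions where one took 900 ms, one took 600 ms, and the rest took 50 ms, `estimate_inp` returns 600 ms; with 100 interactions of the same shape, it skips two and returns 50 ms.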

So, when INP becomes an official core web vitals metric in March 2024 and you notice that it’s not showing up in your Lighthouse report, you’ll know exactly why it isn’t there.

Note: It is possible to script user flows with Lighthouse, including in DevTools. But that probably goes too deep for this article.

Why Is My Time To First Byte Score Worse For Real Users?

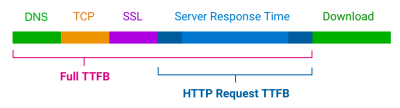

The Time to First Byte (TTFB) is what immediately comes to mind for many of us when thinking about page speed performance. We’re talking about the time from when the browser starts requesting a page, including any redirects and setting up the server connection, until it receives the first byte of the response.

TTFB identifies how fast or slow a web server is to respond to requests. What makes it special in the context of core web vitals (even though it is not considered a core web vital itself) is that it precedes all other metrics. The browser needs to connect to the web server and receive the first byte of data before it can render anything that core web vitals metrics measure. TTFB is essentially an indication of how quickly users can navigate, and core web vitals can’t happen without it.

You might already see where this is going. When we start talking about server connections, there are going to be differences between the way that RUM data observes TTFB and how lab data approaches it. As a result, we’re bound to get different scores depending on which performance tools we’re using and which environment they run in. As such, TTFB is more of a “rough guide,” as Jeremy Wagner and Barry Pollard explain:

“Websites vary in how they deliver content. A low TTFB is crucial for getting markup out to the client as soon as possible. However, if a website delivers the initial markup quickly, but that markup then requires JavaScript to populate it with meaningful content […], then achieving the lowest possible TTFB is especially important so that the client-rendering of markup can occur sooner. […] This is why the TTFB thresholds are a “rough guide” and will need to be weighed against how your site delivers its core content.”

So, if your TTFB comes in higher when using a tool that relies on RUM data than the value you get from Lighthouse’s lab data, it’s probably because caches are being hit when the lab tool tests a particular page, while many real visitors get an uncached response. Or perhaps the real user is coming in from a shortened URL that redirects them before they connect to your server. It’s even possible that a real user is connecting from a place that is really far from your web server, which takes a little extra time, especially if you’re not using a CDN or running edge functions. It really depends on both the user and how you serve data.
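One way to see where real-user TTFB goes is to break a navigation into phases. A RUM snippet can read these timestamps from the browser’s `PerformanceNavigationTiming` entry; the Python sketch below assumes they have already been sent to you as a dictionary using the same field names, in milliseconds relative to the start of the navigation. Time before `fetchStart` is attributed to redirects and other pre-fetch work, because browsers report zero for the detailed redirect timings when a redirect crosses origins, as with a URL shortener. The two timing dictionaries are hypothetical.

```python
def ttfb_breakdown(nav: dict[str, float]) -> dict[str, float]:
    """Split TTFB into phases (ms) using PerformanceNavigationTiming field names.

    Assumes timestamps are relative to the navigation's startTime (0).
    The phases need not add up exactly to TTFB; small gaps are normal.
    """
    return {
        # Redirects (including cross-origin ones) and other work before the final fetch.
        "before_fetch": nav["fetchStart"],
        "dns": nav["domainLookupEnd"] - nav["domainLookupStart"],
        # TCP connection setup, including the TLS handshake on HTTPS.
        "connection": nav["connectEnd"] - nav["connectStart"],
        # Sending the request and waiting for the server's first byte.
        "waiting": nav["responseStart"] - nav["requestStart"],
        "ttfb": nav["responseStart"],
    }


# Hypothetical visitor arriving through a URL shortener, with an uncached response.
real_user = {"fetchStart": 300, "domainLookupStart": 310, "domainLookupEnd": 360,
             "connectStart": 360, "connectEnd": 520, "requestStart": 520,
             "responseStart": 900}

# Hypothetical lab run hitting a warm cache, with no redirect or new DNS lookup.
lab_run = {"fetchStart": 5, "domainLookupStart": 5, "domainLookupEnd": 5,
           "connectStart": 5, "connectEnd": 5, "requestStart": 6,
           "responseStart": 120}
```

In this hypothetical pair, the real user’s 900 ms TTFB is mostly a 300 ms redirect, a 160 ms connection, and a 380 ms wait for an uncached response, while the lab run’s 120 ms is almost entirely a quick server response.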

This article has already introduced some of the nuances involved in collecting web vitals data. Different tools and data sources often report different metric values. So which ones can you trust?

When working with lab data, I suggest preferring observed data over simulated data. But you’ll see differences even between tools that all deliver high-quality data. That’s because no two tests are the same, with different test locations, CPU speeds, or Chrome versions. There’s no one right value. Instead, you can use lab data to identify optimizations and see how your website changes over time when tested in a consistent environment.
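Because individual lab runs vary, one practical approach is to run the same test several times in a consistent environment and compare medians rather than single results. The Python sketch below does that; the 10% tolerance is an arbitrary, hypothetical threshold for what counts as a real change rather than noise.

```python
from statistics import median

# Hypothetical threshold: differences under 10% are treated as run-to-run noise.
NOISE_TOLERANCE = 0.10


def compare_runs(baseline_ms: list[float], candidate_ms: list[float],
                 tolerance: float = NOISE_TOLERANCE) -> str:
    """Compare the medians of two sets of lab runs from the same environment."""
    before, after = median(baseline_ms), median(candidate_ms)
    if after > before * (1 + tolerance):
        return "slower"
    if after < before * (1 - tolerance):
        return "faster"
    return "no clear change"
```

For example, baseline LCP runs of 2,400, 2,600, 2,500, 3,900, and 2,450 ms have a median of 2,500 ms, so a later set with a median of 2,050 ms is reported as `"faster"`, and the single 3,900 ms run doesn’t skew the comparison.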

Ultimately, what you want to look at is how real users experience your website. From an SEO standpoint, the 28-day Google CrUX data is the gold standard. However, it won’t be accurate if you’ve rolled out performance improvements over the last few weeks. Google also doesn’t report CrUX data for some high-traffic pages because the visitors may not be logged in to their Google profile.

Installing a custom RUM solution on your website can solve that issue, but the numbers won’t match CrUX exactly. That’s because visitors using browsers other than Chrome are now included, as are users with Chrome analytics reporting disabled.

Finally, while Google focuses on the fastest 75% of experiences, that doesn’t mean the 75th percentile is the right number to look at. Even with good core web vitals, 25% of visitors may still have a slow experience on your website.
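To see how a passing 75th percentile can hide slow visits, the sketch below rates a set of LCP samples against the core web vitals thresholds (good up to 2,500 ms, poor above 4,000 ms; the INP and CLS thresholds are included for completeness) and also reports the share of visits that were not rated good. The samples are hypothetical, and the percentile is computed with `statistics.quantiles`, which only approximates how CrUX computes it.

```python
from statistics import quantiles

# Core web vitals thresholds: (good up to and including, poor above).
THRESHOLDS = {"LCP": (2500, 4000), "INP": (200, 500), "CLS": (0.1, 0.25)}


def rating(metric: str, value: float) -> str:
    """Rate a single value as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


def assess(metric: str, samples: list[float]) -> tuple[str, float]:
    """Return the rating at the 75th percentile and the share of non-good visits."""
    p75 = quantiles(samples, n=4, method="inclusive")[2]
    not_good = sum(1 for value in samples if rating(metric, value) != "good")
    return rating(metric, p75), not_good / len(samples)
```

With 76 visits at 1,800 ms and 24 at 5,200 ms, `assess("LCP", samples)` returns `("good", 0.24)`: the page passes at the 75th percentile even though nearly a quarter of visits had a poor LCP.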

Wrapping Up

This has been a close look at how different performance tools audit and report on performance metrics, such as core web vitals. Different tools rely on different types of data, which can produce different results when measuring the same performance metrics.

So, if you find yourself with a CLS score in Lighthouse that is much lower than what you get in PSI or DebugBear, go with the Lighthouse report because it makes you look better to the big boss. Just kidding! That difference is a big clue that the data between the two tools is uneven, and you can use that information to help diagnose and fix performance issues.

Are you looking for a tool to track lab data, Google CrUX data, and full real-user monitoring data? DebugBear helps you keep track of all three types of data in one place and optimize your page speed where it counts.

(yk)